The discussion around Windows versus Linux can be religious. So can the discussion around which platform to use, Intel or AMD.

Qualifier: We’ve been in the System Builder Channel since the late 1990s.

Intel System Builder

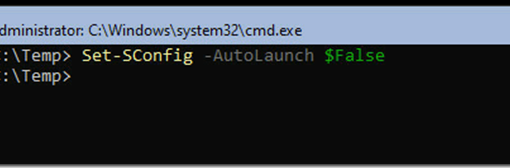

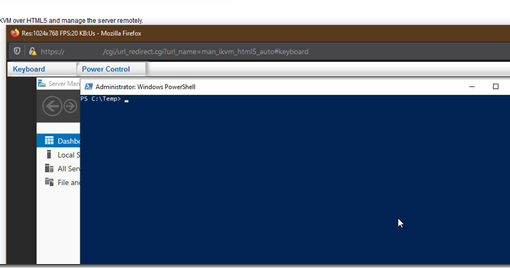

When we started MPECS in 2003 we were an all-Intel house. We went on to be one of the first in the world to build a Hyper-V cluster on the IMS (Intel Modular Server; see our YouTube video of the IMS), on Windows Server Core no less, which was six months of pain. There was very little PowerShell, and lots of CMD and Registry edits. We worked not only with the Intel IMS and PCSD (PC Client Solutions) Engineering Teams but also with key Hyper-V folks at Microsoft to make it happen.

AMD Opteron or Intel Xeon

At that time AMD Opteron sucked for performance. The AMD ODM (Original Design Manufacturer) ecosystem sucked even more. Having five different points of contact for support was painful, never mind the possible blame games. Intel’s Partner Program, with its support crew in Costa Rica (we got to know quite a few of them, along with the crew that designed their systems), was second to none. So, we stuck with them.

In the early days of Azure we could set up the identical VM on Xeon and Opteron and we’d see a 40% disparity between them. Intel was the clear winner.

AMD EPYC Naples

When AMD released EPYC Naples (7001) we took notice but with caution. The NUMA (Non-Uniform Memory Access) boundaries were nuts so we stayed away. That being said, the performance numbers and core count per socket were something to pay attention to.

The key stat in all of this is performance per watt. Fewer watts per Performance Unit means a lower power bill all around. We noticed a huge step down in power with Intel’s introduction of Core technology into Xeon (Nehalem IIRC). Our 800 watt units dropped to under 400 watts, idling along at around 200 watts.
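To put rough numbers on that, here is a minimal sketch of the arithmetic. It assumes a server draws a constant wattage around the clock, which real servers don't, and the electricity rate is a made-up placeholder rather than anything we actually paid. The function names are ours for illustration only.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_kwh(watts: float) -> float:
    """Energy used in one year by a box drawing a constant `watts`."""
    return watts * HOURS_PER_YEAR / 1000


def perf_per_watt(performance_units: float, watts: float) -> float:
    """Performance Units delivered per watt drawn."""
    return performance_units / watts


if __name__ == "__main__":
    RATE_PER_KWH = 0.12  # hypothetical price per kWh, not a real tariff

    # Wattages taken from the paragraph above.
    for label, watts in [("older 800 W unit", 800),
                         ("newer unit under load", 400),
                         ("newer unit idling", 200)]:
        kwh = annual_kwh(watts)
        print(f"{label}: {kwh:,.0f} kWh/year, "
              f"about {kwh * RATE_PER_KWH:,.0f} per year at the assumed rate")
```

The same work done at half the wattage is double the performance per watt, which is why the power bill moves so quickly once a rack full of servers is involved.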

Prior to Core/Nehalem, we didn’t heat our shop for over two years. We pulled the hot side of our server closet into our HVAC system instead.

IMNSHO, Intel got complacent. Opteron was a wash. Naples was not a wash, but it was still painful to work with in extremely dense virtualization situations.

AMD EPYC Rome

Then came EPYC Rome.

We signed as many AMD ODM NDAs (Non-Disclosure Agreements) as we could get our hands on to get into the back room with them. It was obvious to us, at that time, that AMD had not only hit it out of the park but had done it like The Babe.

As much as there’s loyalty to Intel out there, and their PCSD products have been virtually rock solid for us, having racks and racks of servers bleeding dollars on performance per watt out of loyalty just doesn’t cut it.

With the introduction of EPYC Genoa at 96 physical cores per socket and gobs of RAM, along with PCIe Gen5 to ease the bus bottleneck, the writing was on the wall. Well, realistically, it was on the wall when EPYC Rome was announced and AMD made it clear that the NUMA boundary problem was history, never mind the indicated Performance Unit per Watt.

Intel Partner Program Bellwether

Intel’s Partner Program has always been the bellwether for us. We could tell when things were good and when things were not so good. Well, the Program, and support, have been going downhill since AMD EPYC Rome. :0(

AMD EPYC Platforms Including Dell

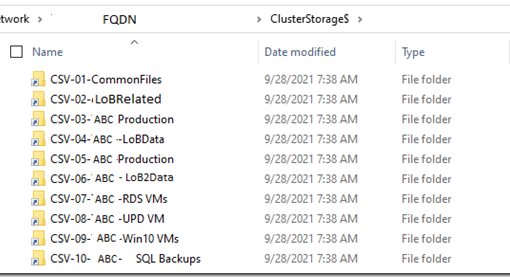

For us, we have had EPYC platforms in standalone, clustered shared SAS, and S2D (Storage Spaces Direct) environments since Rome. We spent a lot of money on a few different ODMs’ Rome platforms and server boards building out labs. We also dove into Mellanox (NVIDIA) to deploy RoCE (RDMA over Converged Ethernet) based switches and network adapters, both for our original S2D design on Intel in 2015/2016 and then again for our AMD EPYC Rome based S2D platform in 2018/2019.

For Tier 1, Dell has done well in the AMD EPYC space. We can flip a cable to expose 160 PCIe lanes to peripherals. This removes a pair of CPU interconnects in a dual socket setting, which in many cases are not needed. Those extra PCIe lanes give a full four PCIe lanes (x4) per U.2/U.3 bay in a 24 bay 2U platform. That’s pretty awesome. No PCIe retimers or PCIe switches needed. So, full bandwidth. Again, the bus becomes the constraint, as the CPU is more than capable of handling all of that volume.
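The lane math is easy to sanity check. The sketch below is ours and rests on a few assumptions: a 128 lane baseline for a dual socket box before the cable flip (the usual figure, but check your platform's documentation), and PCIe Gen4 signalling at 16 GT/s per lane with 128b/130b encoding, as on Rome era platforms. The function names are hypothetical.

```python
LANES_PER_NVME_BAY = 4         # one x4 link per U.2/U.3 bay, as described above
GEN4_GT_PER_S = 16             # PCIe Gen4 raw rate per lane (assumed generation)
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding used by Gen3 and later


def lanes_after_drives(total_lanes: int, bays: int = 24) -> int:
    """Lanes left for NICs, HBAs, and so on once every bay has its own x4 link."""
    return total_lanes - bays * LANES_PER_NVME_BAY


def gen4_link_gb_per_s(lanes: int) -> float:
    """Approximate one-direction bandwidth of a Gen4 link, before protocol overhead."""
    return lanes * GEN4_GT_PER_S * ENCODING_EFFICIENCY / 8


if __name__ == "__main__":
    # 128 is an assumed baseline; 160 is the figure from the paragraph above.
    for total in (128, 160):
        print(f"{total} lanes: {lanes_after_drives(total)} left after 24 x4 bays")
    print(f"x4 Gen4 link: roughly {gen4_link_gb_per_s(4):.2f} GB/s per direction")
```

With 24 bays taking 96 lanes, the 160 lane configuration still leaves 64 lanes for network adapters and other cards, which is what makes the direct attached layout practical.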

Intel Die Shrink Issues

As an aside, Intel has been having issues with their monolithic die production for a number of generations. We could see that with each die shrink it took them longer and longer to get into production.

AMD watched all of that pain and went modular. This was another reason it was obvious to us that AMD was going to kill it once they got past the NUMA problem.

Note that there aren’t too many absolute WOW! moments in tech anymore. The step noted above to Nehalem and Core was significant. The change was very noticeable. Performance Unit to Watt doubled overnight.

AMD EPYC Rome and its subsequent iterations have been nothing short of show stopping.

Oh, and we can deploy those ultra-high TDP (Thermal Design Power) AMD EPYC CPUs air cooled, so long as intake temperatures stay below 34°C.

Intel’s End of an Era and a Thanks!

And yeah, we’ve been so busy that we’ve been out of the loop.

- Intel Exiting the Server Business Selling to MiTAC (Serve the Home)

Thank you to all of the Intel folks we’ve worked with in the Intel System Builder ecosystem. From the engineers to the support folks in Costa Rica and other countries that we have managed to get to know over the years, a big thank you!

Philip Elder

Microsoft High Availability MVP

MPECS Inc.

Our Web Site

PowerShell and CMD Guides