A conversation on the S2D Slack channel brought about a realization that we are on the cusp of one of the bigger technology shifts in the CPU industry in a very long time.

Things have been relatively stable, with incremental changes over successive CPU generations, ever since Intel incorporated Core into their Xeon line many, many years ago and delivered a significant performance per watt increase.

The previous AMD Opteron server platform was pretty much a bust.

When we ran our workloads in Azure on both AMD Opteron and Intel Xeon based virtual machines (VMs), the difference in performance was substantial, with Intel taking the win with ease.

It’s been no secret to us here that we’ve been straddling the PCI Express (PCIe) third generation (Gen3) bandwidth restriction for quite a few years now.

Some vendors managed to get around the single PCIe x16 slot limit by plugging a PCIe x16 daughter card, cabled to the primary card, into another slot in the server. That doubles the x16 bandwidth, but at a significant cost in available PCIe slots.

There’s nothing more exciting than purchasing that dual-port 100GbE Mellanox RoCE RDMA NIC only to find out that one port operates at a partial throughput rate due to the PCIe Gen3 x16 bandwidth limitation.
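To put rough numbers on that, here is a minimal sketch of the arithmetic. It assumes the commonly quoted figure of about 15.75GB/s for a PCIe Gen3 x16 slot in one direction and ignores protocol overhead, so real-world throughput would be somewhat lower. The variable names are ours, for illustration only.

```python
# Rough check: can one PCIe Gen3 x16 slot feed both ports of a dual-port 100GbE NIC?
GEN3_X16_GBYTES_PER_S = 15.75   # approximate Gen3 x16 bandwidth, one direction
PORT_GBITS_PER_S = 100          # line rate of a single 100GbE port
PORTS = 2

slot_gbits = GEN3_X16_GBYTES_PER_S * 8        # slot bandwidth in Gbps
needed_gbits = PORT_GBITS_PER_S * PORTS       # both ports at line rate

# Whatever is left after the first port runs flat out is all the second port gets.
second_port_gbits = max(0.0, min(PORT_GBITS_PER_S, slot_gbits - PORT_GBITS_PER_S))

print(f"Slot: {slot_gbits:.0f} Gbps, NIC line rate: {needed_gbits} Gbps")
print(f"Second port ceiling: {second_port_gbits:.0f} Gbps")
```

Under those assumptions the slot tops out around 126Gbps against 200Gbps of line rate, which is why the second port cannot run at full speed.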

AMD EPYC Naples and Rome

AMD EPYC Naples, the first generation EPYC, dealt with the PCIe Gen3 limitation using a Band-Aid approach by making more PCIe Gen3 lanes available via each processor. But, we still had the PCIe Gen3 bandwidth limitations to deal with.

With the soon to be widely available AMD EPYC Rome CPU, the line’s second generation, we have what potentially amounts to a substantial feature set improvement in the CPU market.

PCIe Gen4

First, the new processor is the first to market with PCIe fourth generation (Gen4) lanes. This offers a doubling of bandwidth over current PCIe Gen3. This improvement is enough on its own to attract our attention.

We have mentioned in the past that our preference was to skip PCIe Gen4 and go to Gen5 right away. Storage technology and its resulting performance increases have been substantial over the last three to five years. Even with the bandwidth increase we will see in PCIe Gen4, it still won’t be difficult to saturate that bus when all-flash NVMe and at least a pair of 100GbE dual-port NICs are installed.

It’s important to note that in an AMD dual socket setup half of each processor’s lanes are used to communicate with the other CPU, leaving 128 lanes for peripherals (64 lanes per CPU). With the Rome PCIe Gen4 setup there would be an option to use three PCIe Gen4 x16 paths between processors instead of the specified four PCIe x16 paths per CPU. That would mean 160 PCIe lanes available for peripherals versus the in-specification 128 lanes. There are two caveats, though:

- System Board manufacturers would need to make the necessary modifications at that level

- Bandwidth between two CPUs would be the same as Naples, which is okay, but may be a limitation in CPU intensive environments
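The lane counts above follow from simple arithmetic. The sketch below assumes 128 lanes per processor and x16 socket-to-socket paths, as described in the text. The function name is hypothetical, and the single socket result is an upper bound since a board may not expose every lane.

```python
LANES_PER_CPU = 128   # lanes per EPYC processor, per the text above
LINK_WIDTH = 16       # each socket-to-socket path is x16

def peripheral_lanes(sockets: int, inter_socket_links: int = 0) -> int:
    """Total lanes left for peripherals across all sockets."""
    if sockets == 1:
        # No second CPU to talk to, so nothing is spent on inter-socket paths.
        return LANES_PER_CPU
    used_per_cpu = inter_socket_links * LINK_WIDTH
    return sockets * (LANES_PER_CPU - used_per_cpu)

print(peripheral_lanes(2, 4))  # in-specification dual socket
print(peripheral_lanes(2, 3))  # three inter-socket paths per CPU
print(peripheral_lanes(1))     # single socket upper bound
```

Dropping one x16 path per CPU frees 16 lanes on each socket, which is where the extra 32 peripheral lanes come from.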

In a single socket solution most, if not all, of the PCIe lanes would be available for on-board peripherals and PCIe slots. For EPYC Rome, that means significant bandwidth for our smaller S2D solutions. If possible, the best CPU would be an 8 core ultra-high GHz model.

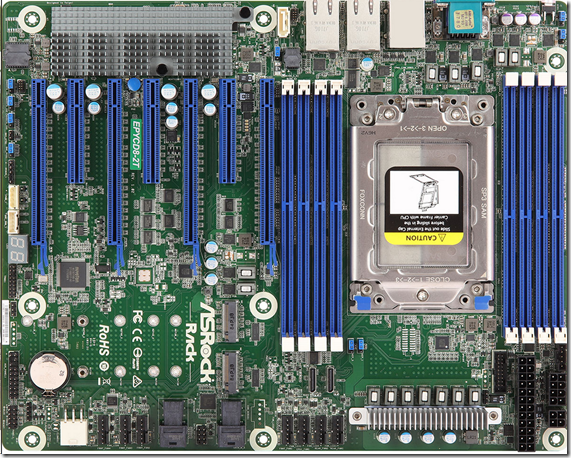

ASRock Rack EPYCD8-2T AMD EPYC Naples ATX Board with 3x PCIe x16 wired lanes

Memory Changes

Second, a slight edge for the AMD EPYC platform is the extra pair of memory channels: 8 channels to Intel’s 6. That means we can set up a single socket with up to 2TB of RAM when all eight channels have a secondary DIMM slot available. For companies that host tenant workloads, having more RAM means more room for tenants. That translates very quickly into increased revenue streams.
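As a quick sanity check on the 2TB figure, here is a small sketch. The 128GB DIMM size is our assumption, inferred from 2TB spread across 16 slots. The six-channel line is a like-for-like arithmetic comparison only, not a statement about what any particular Intel platform supports.

```python
def max_ram_tb(channels: int, dimms_per_channel: int, dimm_gb: int) -> float:
    """Maximum RAM per socket in TB for a given channel and DIMM layout."""
    return channels * dimms_per_channel * dimm_gb / 1024

DIMM_GB = 128  # assumed DIMM size (2TB / 16 slots)

eight_channel = max_ram_tb(8, 2, DIMM_GB)  # EPYC: 8 channels, 2 DIMMs each
six_channel = max_ram_tb(6, 2, DIMM_GB)    # same DIMMs on 6 channels

print(f"8 channels: {eight_channel} TB per socket")
print(f"6 channels: {six_channel} TB per socket")
```

With the same DIMMs, the two extra channels are worth an additional 512GB per socket.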

It has yet to be seen if a fully configured single socket with 2TB or dual socket with 4TB would hit a bottleneck at the CPU or the PCIe bus. One day we hope to test these limits. 😉

Now, all of the above being said, Intel still has a bit of an edge on the memory side of things with Intel Optane DC Persistent Memory (pMEM). Available in up to 512GB DIMMs, an Intel system could be configured with 2TB or more of Optane DC pMEM, allowing for a huge performance increase for that system. Online transaction sensitive applications need only apply. 😉

Storage Impact

Hopefully, the improved PCIe Gen4 bandwidth and lane count situation will translate to 1U and 2U single socket platforms that allow us more dedicated NVMe PCIe lanes without the need for a PCIe NVMe switch.

That means more NVMe throughput and IOPS available for workloads, with the high performance network fabric to move those bits around.
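A rough lane budget shows how many NVMe drives could be attached directly, without a switch. Everything here is an assumption for illustration: that all 128 lanes of a single socket are usable, that each NIC takes an x16 slot, and that each NVMe drive uses a typical x4 link. Actual boards will reserve lanes for on-board devices.

```python
TOTAL_LANES = 128     # assumed: every lane on a single socket EPYC is usable
LANES_PER_NIC = 16    # assumed: each NIC occupies an x16 slot
LANES_PER_NVME = 4    # assumed: typical x4 link per NVMe drive

def direct_attach_nvme(nics: int, reserved_lanes: int = 0) -> int:
    """Number of x4 NVMe drives that fit without a PCIe switch."""
    free = TOTAL_LANES - nics * LANES_PER_NIC - reserved_lanes
    if free < 0:
        raise ValueError("NICs and reserved lanes exceed the lane budget")
    return free // LANES_PER_NVME

print(direct_attach_nvme(nics=2))                    # two x16 NICs
print(direct_attach_nvme(nics=2, reserved_lanes=8))  # plus 8 lanes held back
```

Under these assumptions a pair of x16 NICs still leaves room for 24 directly attached drives, which lines up with a fully populated 24-bay 2U chassis.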

PCI Express Performance

As an FYI, here are PCI Express numbers at a glance:

- PCIe Gen3 (Intel, as of this writing on 2019-06-14)

  - x1 Lane: 985MB/Second

  - x16 Lanes: 15.75GB/Second

- PCIe Gen4 (AMD EPYC Rome, as of this writing on 2019-06-14)

  - x1 Lane: 1.969GB/Second

  - x16 Lanes: 31.51GB/Second

- PCIe Gen5

  - x1 Lane: 3.938GB/Second

  - x16 Lanes: 63.01GB/Second
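For anyone wondering where these numbers come from, they can be derived from each generation’s per-lane transfer rate (8, 16, and 32 GT/s) and the 128b/130b line encoding that Gen3 and later use. The sketch below reproduces the list to within rounding. The figures are per direction, and the names are ours.

```python
# Per-lane transfer rates in gigatransfers per second.
TRANSFER_RATE_GT_S = {"Gen3": 8, "Gen4": 16, "Gen5": 32}
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b: 128 payload bits per 130 on the wire

def lane_gbytes_per_s(gen: str) -> float:
    """Usable bandwidth of one lane, one direction, in GB/s."""
    return TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY / 8

def link_gbytes_per_s(gen: str, lanes: int) -> float:
    """Usable bandwidth of a link with the given lane count."""
    return lane_gbytes_per_s(gen) * lanes

for gen in TRANSFER_RATE_GT_S:
    x1 = link_gbytes_per_s(gen, 1)
    x16 = link_gbytes_per_s(gen, 16)
    print(f"PCIe {gen}: x1 {x1:.3f} GB/s, x16 {x16:.2f} GB/s")
```

Each generation doubles the transfer rate while keeping the same encoding, so the bandwidth doubles at every step.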

AMD EPYC Rome and S2D

Where we see EPYC Rome fitting in, at least initially, is in the single socket Storage Spaces Direct (S2D) 1U, 2U, and 4U disaggregated solutions. Most of the bandwidth need is in the PCIe bus, not memory. Even then, for storage solutions where a memory cache is needed, the 2TB upper limit for a single socket makes for lots of room to work with.

In a Hyper-Converged solution we would move to 16 cores with 1TB of RAM. Our aim would be to set up the system with a higher clock speed CPU rather than a higher core count, since most of the common virtual machine workloads use 2-4 virtual CPUs. AMD EPYC Rome gives us the ability to bump up the core density, all without the added cost of a dual socket setup.

A few directions we could go in with an AMD EPYC Rome platform:

- S2D Scale-Out File Server Cluster

  - 2-16 nodes

  - AMD EPYC Rome 8-16 Cores

  - 256GB ECC

  - (2) Mellanox ConnectX-4 LX Dual-Port 25GbE PCIe Gen3

  - Or for a faster fabric

    - (2) Mellanox ConnectX-5/6 Dual-Port 100GbE PCIe Gen4

    - Offering 400Gbps of RoCE (RDMA over Converged Ethernet) ultra-low latency goodness 🙂

  - (2) 128GB M.2 NVMe RAID 1 for OS

  - (32) or (64) 32TB NVMe Rulers (Capacity)

  - (4) or (8) 8TB PCIe Gen4 NVMe Add-In Cards (Cache) at 5 DWPD+ (Drive Writes Per Day)

    - Count would depend on storage needs

- Hyper-V Compute Cluster

  - 2U x 4 Node Platform, per node:

  - (2) AMD EPYC Rome 8-32 Core

  - 1TB or 2TB ECC

  - (1) Mellanox ConnectX-4 LX Dual-Port 25GbE PCIe Gen3

  - Or

    - (1) Mellanox ConnectX-5/6 Dual-Port 100GbE PCIe Gen4

    - If we can squeeze two in we would!

  - (2) 128GB M.2 NVMe RAID 1 for OS

Of note, Mellanox has current network card offerings in PCIe Gen4 flavours, giving us access to the full available bandwidth on all ports.

Conclusion

The obvious conclusion is that AMD has caught Intel completely off-guard, to put it politely. So long as AMD can get product into the market, and soon, they should make a significant dent in Intel’s sales before Intel’s own PCIe Gen4 product gets released sometime late next year (2020) or the year after.

We are certainly hoping that we can put together a reasonably priced S2D solution set on AMD EPYC that would give us dual socket performance and I/O without the dual socket price. That is, after all, one of AMD’s primary marketing pushes for the platform.

Something important to note here: AMD has pretty much broken the news about the EPYC Rome processor in recent weeks and/or months. Now, what we need to see is product in the channel . . . and soon!

Whether the release is truly EPYC has yet to be seen. 😉

Have a great weekend everyone!

Philip Elder

Microsoft High Availability MVP

MPECS Inc.

Co-Author: SBS 2008 Blueprint Book

www.s2d.rocks!
